Conversational BI with Local LLM: How to Talk to Your Database?

A practical and straightforward approach to upgrading your BI: combine traditional dashboards with a chatbot that understands natural language and queries your database in real time. Learn how to build this solution using open-source LLMs running locally.

The real-world problem

Everyone who’s ever sat in a meeting with a manager knows this: the dashboard is never perfect. We add KPIs, pie charts, colors, filters — and the next day someone asks:

“Okay, but what if I want to see only physical channel sales, ordered by average ticket size, excluding returns?”

- The dev team sighs — someone has to edit queries, pipelines, and frontend logic.

- The manager sighs — they’ll either wait a sprint or hack something in Excel.

That’s when, in a chat between tech and business folks, someone asked:

“What if the dashboard stays put, but we plug in a chat that understands SQL behind it and answers any question that doesn’t fit into a chart?”

Spoiler: it works, it’s cheap (or even free), and it can run on your laptop.

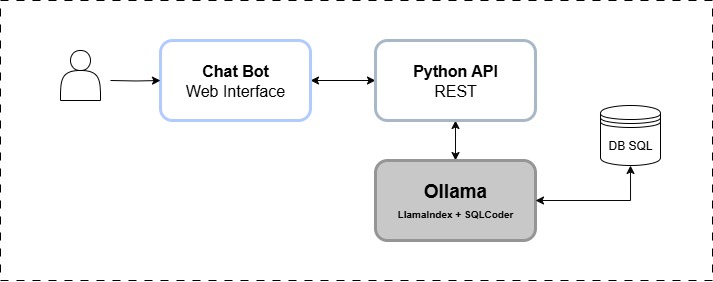

The approach in a nutshell

- The static dashboard is still great for trends and visual alerts.

- The conversational chatbot handles ad-hoc analysis: natural language → SQL → answer.

If you’ve suffered from monolithic BI platforms, relax — this adds intelligence without breaking anything.

The stack we tested

| Component | What it does | Runs locally? |

|---|---|---|

| LlamaIndex | Orchestrates question ↔ schema ↔ LLM | ✅ (via pip / python) |

| SQLCoder‑7B/15B | Generates SQL from natural questions | ✅ via Ollama |

| OllamaEmbedding + nomic‑embed-text |

Embeds context between question and schema |

✅ via Ollama |

| Frontend | Chat bot (optional but nice) | ✅ React |

| Database | Where your data lives | ✅ SQLite/Postgres |

💡 No server? You can swap SQLCoder for gpt-4o-mini and keep local embeddings. Pay only for tough questions.

So, what is SQLCoder?

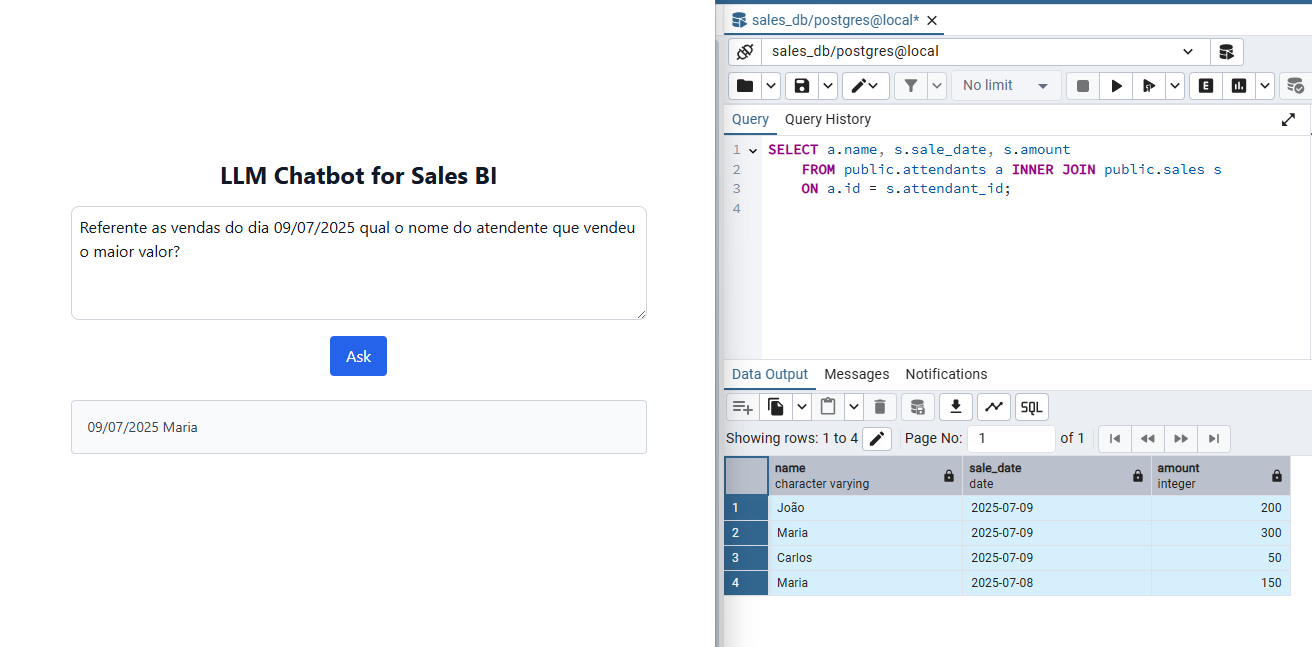

SQLCoder is an open-source LLM trained to generate valid, coherent SQL from natural language questions. It understands things like:

- “Who sold the most today?”

- “Which product had the highest average ticket this month?”

- “Which customers haven't purchased in the last 30 days?”

And outputs clean, standard SQL. Even better: you can run it locally using Ollama.

Behind the scenes: how the magic happens

LlamaIndex’s NLSQLTableQueryEngine does the heavy lifting. Here's what we give it:

- User question (e.g., “Who sold the least today?”)

- Table descriptions (e.g.,

sales= value, date, attendant) - Few-shot examples (real manager questions with SQL)

The LLM takes it all in, figures it out, and returns a valid query.

Improving accuracy (it's very important)

- ✅ System prompt: “Use ORDER BY + LIMIT 1 for top/bottom.”

- ✅ Few-shot: Add 5–10 real manager questions and their ideal SQL.

- ✅ SQL views: e.g.,

daily_sales,monthly_rankingsimplify logic. - ✅ Fallback: Try GPT-4o only when SQLCoder fails.

Challenges we faced

| Issue | How we solved it |

|---|---|

| First response is slow | Set request_timeout=180 on embedding and LLM |

| SQL missing GROUP BY | Post-processing injects missing clauses |

| Sensitive columns | Only expose views with anonymized data |

| Ambiguous questions | Improve schema description + use more examples |

Pros, cons, and what’s next

✔️ Pros

- No more bottlenecks in IT for “simple requests.”

- Reduces dependency on heavy BI platforms for casual users.

- Dashboards stay useful for visual alerts and KPIs.

- Runs offline, on your laptop — but you’ll need decent RAM to make it viable.

❌ Cons

- LLM may fail if your schema is messy or poorly described.

- Latency is higher than clicking a dashboard (but still reasonable).

- Security matters: run in read-only mode and always validate output.

🤔 What about OpenAI?

You can use OpenAI instead of a local model. Just switch to OpenAI(model="gpt-4") in the code (it's very interesting).

- ✔️ Handles more complex questions better

- ✔️ High-quality embeddings

- 🚩 Paid API (token-based billing)

- 🚩 Requires internet and an API key

- 🚩 Potential data privacy concerns

Want to try it?

I created a simple yet functional codebase with a UI so you can explore this approach hands-on.

- docker-compose for PostgreSQL, pgAdmin, and Ollama

- FastAPI backend using LlamaIndex and SQLCoder

- React + Tailwind frontend with a connected chat

To run it:

docker compose up -dThen launch the backend and frontend locally — done!

👉 Repo: betonr/python-llm-sql

✨ Final thoughts

This article doesn’t deliver a final solution — but it shows a practical and viable path: exploring data using natural language, without relying solely on dashboards or overloading the IT team.

You can keep your existing charts and simply add a smart conversational layer to handle the unexpected questions.

Where you can take it next:

- Add filters and business rules per department

- Show on-demand charts based on SQL answers

- Customize responses based on user roles

- Log questions to improve the model over time

If this makes sense for your team, it’s worth trying. It runs locally, it’s simple to set up — and it can change how you interact with your data.